Locks and Security News: your weekly locks and security industry newsletter

2nd July 2025 Issue no. 760

Your industry news - first

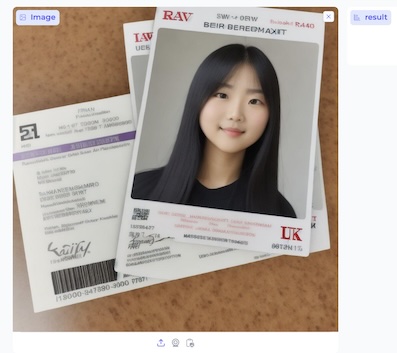

Deepfake experts Sumsub’s guide to spotting AI deepfakes

2024 is the year of the deepfake - with increasingly realistic, AI-powered deepfakes already impacting the biggest election year in history. The UK has not been spared this malicious influence: Sumsub’s data shows that the UK is currently the seventh most targeted nation in the world and the third most targeted in Europe.

Generally speaking, social media platforms aren’t doing enough to tackle the spread of misinformation, with the exception of TikTok, which now watermarks all AI content. Likewise, it’s likely too late for governments to effectively regulate deepfakes and other misinformation in time to spare elections from malicious influence.

Therefore, users are the last line of defence - and should know the tell-tale signs which could give away artificially generated pictures, videos, and audio clips. Here are Sumsub’s key things to look out for:

- Unnatural and uneven movement, particularly in the hands or lips.

- Changes in lighting, unnatural textures, or digital artefacts in the background or skin tones.

- Unusual blinking patterns, and poor synchronisation of lip, throat, or Adam’s apple movements with speech.

To avoid manipulation of your image for potential fraud attempts:

- Be cautious about sharing personal photos online. You can limit the number of personal photos you share on social media, or adjust privacy settings to restrict who can access your photos.

- Avoid sharing high-resolution, unedited photos that could be easily manipulated.

- Thoroughly read the terms and conditions of all AI apps, ensuring they cannot use your photos without your permission.

- Utilise smart technology. While AI may pose danger, it’s also a remedy. AI can be used to detect certain visual or audio artefacts in deepfakes that are absent in authentic media.

Pavel Goldman-Kalaydin, Head of AI & ML at Sumsub, comments: “Unfortunately, social media and news platforms lack adequate technology to differentiate between downloaded, generated, or original content, posing a significant risk of enabling the spread of disinformation - as well as risks to both personal reputation and financial security.

“Users should prioritise verifying the source of information and distinguishing between trusted, reliable media and content from unknown users. Even content shared by friends or reputable media outlets can be deepfakes, as demonstrated by last year's notable spoofs, such as the Pope wearing Balenciaga, which duped the media. Therefore, it's crucial to scrutinise details in images or videos - as well as the context.

“If any of the following features are detected - unnatural hand or lip movement, artificial background, uneven movement, changes in lighting, differences in skin tones, unusual blinking patterns, poor synchronisation of lip movements with speech, or digital artefacts - the content is likely generated.

“While our primary focus is on how these techniques affect businesses and users during verification processes, the main use of deepfakes currently is to create non-consensual adult content or other damaging content that is later spread on social media.

“Technology will continue to advance rapidly, and soon it will be impossible for the human eye to detect deepfakes without dedicated detection technologies. So we recently released For Fake's Sake, a set of models enabling the detection of deepfakes and synthetic fraud in visual assets. It is the first solution of its kind to be developed by a verification provider and made available to all for free download and use.”

- Countries with the most deepfakes detected in Q1 2024 are China, Spain, Germany, Ukraine, the US, Vietnam, and the UK.

- The number of deepfakes detected in the UK increased by 45% from Q4 2023 to Q1 2024.

- The highest YoY increases in deepfakes were in Bulgaria (3000%), Portugal (1700%), Belgium (800%), Spain (191%), Germany (142%), and France (97%).

3rd July 2024